Gaussian3Diff adopts 3D Gaussians defined in UV space as the underlying 3D representation, which intrinsically supports high-quality novel view synthesis, 3DMM-based animation, and 3D diffusion for unconditional generation.

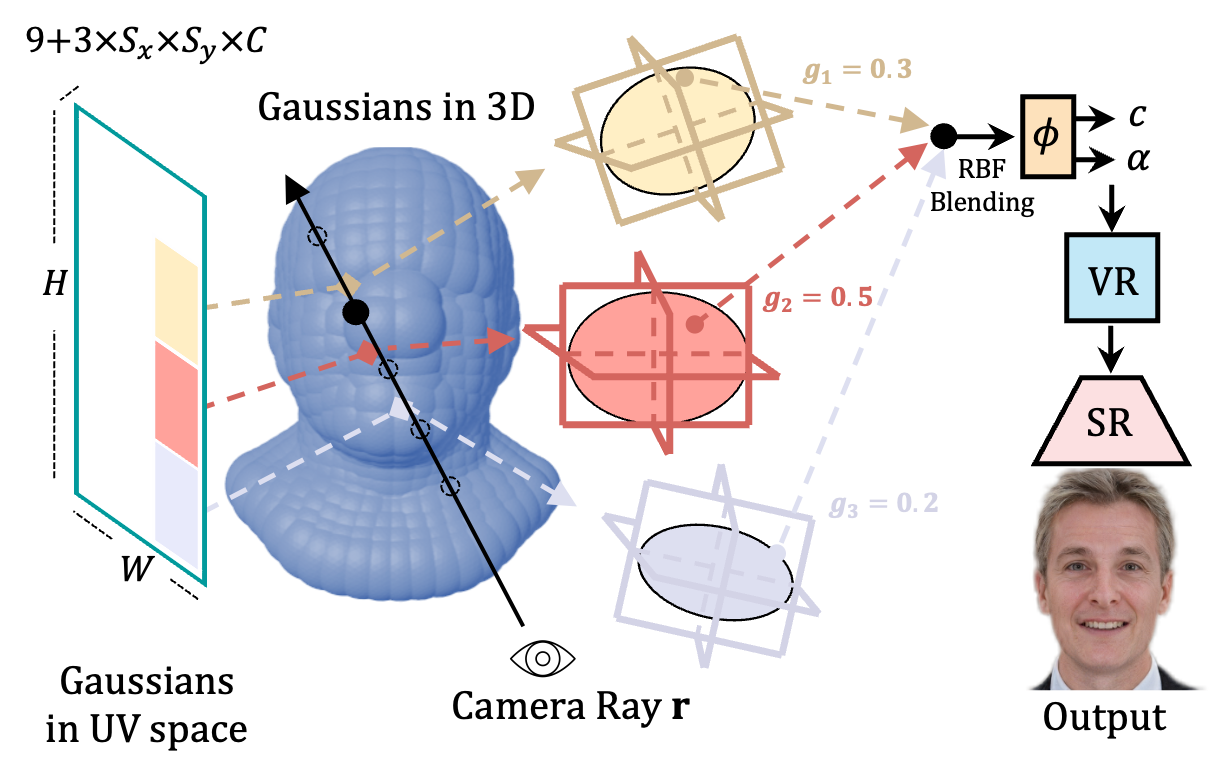

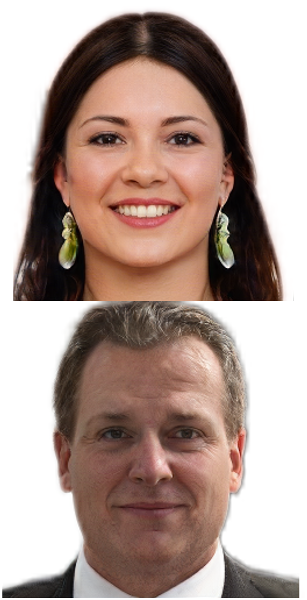

We present a novel framework for generating photorealistic 3D human heads and subsequently manipulating and reposing them with remarkable flexibility. The proposed approach leverages an implicit function representation of 3D human heads, employing 3D Gaussians anchored on a parametric face model. To enhance representational capabilities and encode spatial information, we embed a lightweight tri-plane payload within each Gaussian rather than directly storing color and opacity. Additionally, we parameterize the Gaussians in a 2D UV space via a 3DMM, enabling effective use of a diffusion model for 3D head avatar generation. Our method facilitates the creation of diverse and realistic 3D human heads with fine-grained editing of facial features and expressions. Extensive experiments demonstrate the effectiveness of our method.

We propose to anchor 3D Gaussians on the 3DMM UV space with tri-plane payloads, which allows us to decouple the underlying geometry from the complex volumetric appearance. Additionally, this representation is efficient to render and naturally supports diffusion training.

We compare the reenactment results of our method against IDE-3D. Because IDE-3D is segmentation-based, using a reference identity with a different shape unavoidably changes the face layout. Gaussian3Diff, in contrast, models identity and expression in a disentangled way, facilitated by the underlying 3DMM, which preserves the input identity throughout the reenactment process.

For diffusion-based inpainting, we provide either the geometry part or the texture part (in UV space) of the upper face as a hint, and let the diffusion model inpaint the rest. As can be seen, the results inpainted from a texture hint share an identical upper-face texture, including features such as hair and forehead color, while varying in shape. Conversely, the results inpainted from a geometry hint share an identical upper-face shape, including the layout of the eyes and forehead, but have different textures.
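The hint-and-fill idea can be illustrated with a small masking sketch. This is not the authors' implementation: the channel layout (`GEOMETRY`, `TEXTURE`), the assumption that the upper face occupies the top rows of the UV map, and the helper names `build_hint_mask` and `compose` are all hypothetical, and the diffusion sampler itself is omitted (its output is represented by the `generated` array).

```python
import numpy as np

# Hypothetical channel layout of a UV map: geometry first, then texture.
GEOMETRY = slice(0, 3)
TEXTURE = slice(3, 8)


def build_hint_mask(shape, hint_channels, upper_fraction=0.5):
    """Return a boolean mask that is True where the UV map is given as a hint.

    Assumes (for illustration only) that the upper face maps to the top
    `upper_fraction` of the UV rows.
    """
    height = shape[0]
    mask = np.zeros(shape, dtype=bool)
    mask[: int(height * upper_fraction), :, hint_channels] = True
    return mask


def compose(known, generated, mask):
    """Keep hinted entries from `known`; take everything else from `generated`."""
    return np.where(mask, known, generated)
```

With a texture hint, `compose(known, generated, build_hint_mask(known.shape, TEXTURE))` keeps the upper-face texture channels of `known` fixed, while the geometry channels and the whole lower face come from the generated sample. Passing `GEOMETRY` instead gives the complementary case.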

We present interpolation from a source to a target separately for texture and geometry. Because texture and geometry are disentangled in our representation, Gaussian3Diff supports modifying each component individually. The intermediate results also remain highly plausible.
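As a rough illustration of per-component interpolation, the sketch below linearly blends only one group of UV-map channels and leaves the other untouched. The channel split, the linear blend, and the function name `interpolate_component` are assumptions made for this example; the page does not specify the interpolation scheme actually used.

```python
import numpy as np

# Hypothetical channel layout of a UV map: geometry first, then texture.
GEOMETRY = slice(0, 3)
TEXTURE = slice(3, 8)


def interpolate_component(source, target, t, channels):
    """Blend only `channels` from `source` toward `target` with weight t in [0, 1].

    All other channels are copied from `source` unchanged.
    """
    out = source.copy()
    out[..., channels] = (1.0 - t) * source[..., channels] + t * target[..., channels]
    return out
```

Calling `interpolate_component(source, target, 0.5, TEXTURE)` moves the texture halfway toward the target while keeping the source geometry; swapping in `GEOMETRY` does the reverse.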

Here, we reenact two randomly sampled source inputs onto the targets: the target Gaussians are driven by adjusting the inner 3DMM mesh with the shape and expression codes from the source. The reenactment results preserve the targets' original texture while adopting the shape and expression of the source inputs.
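The code swap described above can be sketched with a generic linear 3DMM, in which vertices are a mean shape plus linear shape and expression offsets. Everything named here is hypothetical: the dictionary keys, the `morph_vertices` and `reenact` helpers, and the choice to use mesh vertices directly as Gaussian anchors stand in for details this page does not give.

```python
import numpy as np


def morph_vertices(mean, shape_basis, expr_basis, shape_code, expr_code):
    """Generic linear 3DMM: mean vertices plus shape and expression offsets.

    mean: (V, 3); shape_basis: (3V, S); expr_basis: (3V, E).
    """
    offsets = shape_basis @ shape_code + expr_basis @ expr_code
    return mean + offsets.reshape(-1, 3)


def reenact(target_avatar, source_codes, model):
    """Drive the target with the source's codes while keeping the target's texture."""
    anchors = morph_vertices(
        model["mean"],
        model["shape_basis"],
        model["expr_basis"],
        source_codes["shape_code"],
        source_codes["expr_code"],
    )
    return {
        "texture": target_avatar["texture"],  # unchanged appearance payload
        "anchors": anchors,                   # geometry now follows the source
    }
```

The design point is that only the mesh-side inputs change: the texture payload is passed through untouched, which is what lets the result keep the target's appearance.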

Our work is inspired by the following works:

LDIF, Nerflets and 3D Gaussian Splatting introduce the idea of representing 3D scenes with local Gaussians.

Panohead can synthesize full 3D heads and serves as our data source.

DDF proposes region-based texture-geometry transfer across NeRFs and inspired our applications.

@inproceedings{lan2023gaussian3diff,
  author={Lan, Yushi and Tan, Feitong and Qiu, Di and Xu, Qiangeng and Genova, Kyle and Huang, Zeng and Fanello, Sean and Pandey, Rohit and Funkhouser, Thomas and Loy, Chen Change and Zhang, Yinda},
  title={Gaussian3Diff: 3D Gaussian Diffusion for 3D Full Head Synthesis and Editing},
  year={2024},
  booktitle={ECCV},
}